Translating Paper into Trust

by Edward Vasko

Where developers build value for an organization, security builds trust.

Shannon Lietz

In my first post, I looked at the benefits of extending a culture of collaboration and trust across the organization. In this post, I want to look at how that collaboration can lead to security controls tailored to minimize their impact on job performance.

While writing about secure software development, Shannon Lietz pointed out that the security team does not always provide guidance to developers in a useful form. They produce policies, standards, and procedures that are simultaneously too detailed and not specific enough, so it is difficult for developers to translate those papers into design requirements. She called for the security team to collaborate with the development team to “translate paper into code.”

I think we can go further. We should work to translate paper into trust by extending that collaboration to other business units. Comprehensive policies are necessary - particularly for compliance and audit purposes - but organizations need to translate those papers into security controls that enable every employee to do their job securely.

If an organization can prioritize reasonable controls to protect against the most damaging attacks and the most common threats, it can reduce the risk of data breaches, ransomware, financial fraud, and reputation loss.

Securing the trust of clients or customers begins with building trust between the security team and the rest of the organization. The entire organization needs to balance operational needs against security risks. Users must trust the security team to develop reasonable controls, and the security team must trust users to alert them to any changes that might introduce new risks. That collaboration must be based on the operational needs of each business unit, as well as the organization as a whole.

Organizational Needs

Like so many other writers, including Shannon Lietz, I’m going to use Abraham Maslow’s extremely useful hierarchy of needs, shown below, as a framework for discussion.

Maslow developed this hierarchy as a way to explain human motivation. He explained that basic physiological needs must be met before humans will expend much effort on higher-level needs. In other words, extremely hungry poets will spend their energy finding food rather than perfecting their art. Just like people, organizations need to concentrate on the basics first - the people, equipment, information, and funds needed to do the job. Once those are in place, they can move up to higher-level needs. Simply put, organizations don’t worry about database security, for example, until they have a database to secure.

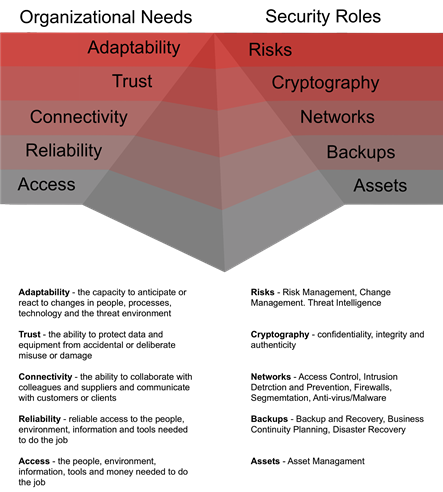

IT systems seem to introduce the most challenging risks. If we look at IT needs and the role of the security team in protecting the organization’s ability to meet those needs, an Organizational IT Needs Hierarchy might look something like this:

Email security is a useful example of an organizational need: every employee uses email, and most cyber attacks involve it one way or another. As attacks have evolved from nuisance spam to malware-laden attachments to sophisticated phishing and spoofing attacks, technical security controls have evolved too. The best solutions today blend several elements: spam filters, antivirus scanners, email authentication methods (such as SPF and DMARC), and adaptive security systems that use machine learning to flag suspicious messages.

These technical controls will never block every malicious email, and they can accidentally block legitimate ones. Each organization will need to fine-tune them continually so that they block obviously bogus or malicious messages and flag suspicious messages that users can then choose to accept or report.
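To make the block/flag/deliver idea concrete, here is a minimal sketch. It assumes the upstream filters (spam, antivirus, authentication, machine learning) have already been combined into a single risk score between 0 and 1; that score, the threshold values, and the `retune` rule that nudges thresholds based on user reports are all hypothetical illustrations, not a description of any particular product.

```python
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    DELIVER = "deliver"  # looks legitimate: deliver normally
    FLAG = "flag"        # suspicious: deliver with a warning the user can accept or report
    BLOCK = "block"      # obviously bogus or malicious: quarantine, do not deliver


@dataclass(frozen=True)
class FilterPolicy:
    # Hypothetical default thresholds; each organization would tune its own.
    flag_threshold: float = 0.5
    block_threshold: float = 0.9

    def __post_init__(self) -> None:
        if not 0.0 <= self.flag_threshold <= self.block_threshold <= 1.0:
            raise ValueError("expected 0 <= flag_threshold <= block_threshold <= 1")

    def disposition(self, risk_score: float) -> Disposition:
        """Map a combined risk score in [0, 1] (assumed to come from
        upstream spam, malware, authentication, and ML checks) to an action."""
        if risk_score >= self.block_threshold:
            return Disposition.BLOCK
        if risk_score >= self.flag_threshold:
            return Disposition.FLAG
        return Disposition.DELIVER


def retune(policy: FilterPolicy, legit_blocked: int, malicious_delivered: int,
           step: float = 0.02) -> FilterPolicy:
    """Nudge thresholds from user feedback (a hypothetical tuning rule).

    legit_blocked: legitimate messages users reported as wrongly blocked.
    malicious_delivered: malicious messages users reported as arriving unflagged.
    """
    block = policy.block_threshold
    flag = policy.flag_threshold
    if legit_blocked > 0:
        block = min(1.0, block + step)  # block less aggressively
    if malicious_delivered > 0:
        flag = max(0.0, flag - step)    # flag more aggressively
    return FilterPolicy(flag_threshold=flag, block_threshold=block)


policy = FilterPolicy()
for score in (0.1, 0.6, 0.95):
    print(score, policy.disposition(score).value)
```

With the default thresholds this prints `deliver`, `flag`, and `block` for the three scores. The middle "flag" band is the part that depends on users: it only reduces risk if people are trained to recognize and report what lands there, and their reports are what feed the fine-tuning.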

No matter how good the technical controls are, users will also need training to spot suspicious messages that slip through, and practice drills to stay vigilant. Finally, the security team should work with business units to develop procedures that help prevent common risks. Given the rise in email-enabled payment fraud, for example, an organization’s finance team should use appropriate procedural controls for payments, such as dual control, verification of every request through a different channel, and a clear procedure for verifying and approving emergency payment requests.
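If a finance team's payment workflow is automated, the two controls above could be enforced in the workflow itself rather than left to memory. The sketch below is a hypothetical illustration: the `PaymentRequest` fields, the channel names, and the rule that the employee who initiates a payment cannot also approve it are assumptions chosen to show dual control and out-of-band verification, not a prescribed design.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class PaymentRequest:
    request_id: str
    initiated_by: str                       # employee who entered the payment
    received_channel: str                   # channel the request arrived on, e.g. "email"
    verified_channel: Optional[str] = None  # independent channel used to confirm it
    approvers: Set[str] = field(default_factory=set)


def verify_out_of_band(req: PaymentRequest, channel: str) -> None:
    """Record that the request was confirmed through a different channel."""
    if channel == req.received_channel:
        raise ValueError("verification must use a different channel than the request")
    req.verified_channel = channel


def approve(req: PaymentRequest, approver: str) -> None:
    """Record an approval; the initiator cannot approve their own payment."""
    if approver == req.initiated_by:
        raise ValueError("initiator cannot approve their own payment")
    req.approvers.add(approver)  # a set, so repeat approvals by one person count once


def can_release(req: PaymentRequest, min_approvers: int = 1) -> bool:
    """Dual control: the initiator plus at least `min_approvers` other people,
    and only after out-of-band verification has been recorded."""
    return req.verified_channel is not None and len(req.approvers) >= min_approvers


# Hypothetical usage: "alice", "bob", and the channel names are placeholders.
req = PaymentRequest(request_id="REQ-001", initiated_by="alice", received_channel="email")
approve(req, "bob")
print(can_release(req))  # False: not yet verified through another channel
verify_out_of_band(req, "phone")
print(can_release(req))  # True
```

The sketch has no shortcut for urgent requests. An emergency procedure would be a separate, explicitly defined path, which is the point of agreeing on it in advance with the finance team rather than improvising under pressure.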

The point is to take the guidelines and procedures and translate them into trusted procedures and controls that work for the users, particularly those in high-risk roles, like payment processing.

Working Together

The goal of the culture of collaboration is “usable security.” NIST’s Mary Theofanos may have described it best:

“Let’s make it easy for users to do the right thing, difficult to do the wrong thing, and easy to recover gracefully when the wrong thing happens.”

This is not a new idea; it has been around since at least 1975, when Saltzer and Schroeder wrote The Protection of Information in Computer Systems. Usable security researchers have learned a lot in the decades since. If this field is new to you, you might consider reading Users Are Not the Enemy, a 1999 paper on password security by Adams and Sasse. One of their conclusions struck a chord with me: if the security team does not educate users about the threats they face and the protection that security controls provide, users will “construct their own model of possible security threats and the importance of security and these are often wildly inaccurate.”

In this respect, security behavior is a bit like social behavior. We would generally prefer that children not construct their own models of how to behave politely, but rather be taught how to avoid offending others by someone with more experience of the risks and benefits of the social environment. These lessons in behavior need to be specific to the child’s age and to the values of the society the child lives in.

Similarly, security education for users may be based on general threat intelligence and established best practices, but for employees in high-risk positions, it should focus on specific threats and the controls available to prevent those threats. The training should rely heavily on the experience of the security team. In turn, the security team should look to users to learn how security controls can be designed for better usability. Developing effective, usable controls must be a collaborative effort between the security team and the rest of the organization.

Edward Vasko, Senior VP of DevOps and Technology

Edward Vasko brings more than 30 years of diverse management, technical, and information security experience to drive Avertium’s overall technology strategy and platform integrations for target acquisitions. His ability to build high-caliber teams that can tackle the hardest cybersecurity challenges, and to identify market opportunities that leverage service-wrapped offerings to provide value to clients, has been celebrated by the industry and his peers.